Lirec Architecture

Part of Project Lirec

The technology developed for Lirec is shared between the research partners, and has to:

- Run on very different platforms

- Reuse code across these platforms

- Support migration at runtime between platforms

Existing robot architectures

On the whole, this kind of technology has historically not been shared much. This is partly because generalising is hard, given the range of possible robots and implementations. However, Lirec has to generalise, as it uses a wide variety of platforms and needs to share as much as possible.

While some existing architectures are available, none of them addresses the needs of Lirec, because its focus is:

- Long term companionship rather than solving a purely technical problem

- Working across different platforms

Methodology

In the past there have been two broad approaches to robot design:

- Hierarchical, model-based planning: expensive, and difficult to maintain an accurate world state model

- Behavioural approach: less state and local decisions, but liable to local minima and opaque to program

This can be summed up as “predictive vs reactive”.

The plan is to use a hybrid approach (BIRON is one example), where the high-level predictive element constrains the reactive element in order to combine local decisions with a world model.
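A minimal sketch of the hybrid idea, assuming a hypothetical grid world: the predictive layer uses its world model (here just the goal position) to narrow down which moves are allowed, and the reactive layer makes a local decision among those using only nearby obstacle readings. The names `plan_allowed_moves` and `reactive_step` are illustrative and are not taken from Lirec or BIRON.

```python
from typing import Dict, Optional, Set, Tuple

Position = Tuple[int, int]

# Hypothetical move set for a grid world.
MOVES: Dict[str, Position] = {
    "north": (0, 1),
    "south": (0, -1),
    "east": (1, 0),
    "west": (-1, 0),
}


def plan_allowed_moves(position: Position, goal: Position) -> Set[str]:
    """Predictive layer: keep only moves that bring the agent closer to the goal."""
    def distance(p: Position) -> int:
        return abs(p[0] - goal[0]) + abs(p[1] - goal[1])

    here = distance(position)
    return {
        name
        for name, (dx, dy) in MOVES.items()
        if distance((position[0] + dx, position[1] + dy)) < here
    }


def reactive_step(
    position: Position, allowed: Set[str], obstacles: Set[Position]
) -> Optional[str]:
    """Reactive layer: pick the first allowed move whose target cell is free."""
    for name in sorted(allowed):
        dx, dy = MOVES[name]
        if (position[0] + dx, position[1] + dy) not in obstacles:
            return name
    return None  # every allowed move is blocked; the planner would need to replan
```

Returning `None` when all allowed moves are blocked is one way to hand control back to the predictive layer rather than letting the reactive layer get stuck in a local minimum.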

The architecture will consist of 3 layers of abstraction:

- Level 1 - The device API layer, the existing hardware drivers

- Level 2 - Logical mappings of devices into competencies

- Level 3 - ION, platform independent
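The three levels can be pictured roughly as follows. This is only a sketch under stated assumptions: `FakeMotorDriver`, `MoveCompetency` and `Mind` are hypothetical stand-ins, and `Mind` is not the ION API, just a placeholder for platform-independent code that talks only to competencies.

```python
class FakeMotorDriver:
    """Level 1: stand-in for an existing hardware driver (hypothetical)."""

    def __init__(self) -> None:
        self.left = 0.0
        self.right = 0.0

    def set_wheel_speeds(self, left: float, right: float) -> None:
        self.left = left
        self.right = right


class MoveCompetency:
    """Level 2: maps a logical request onto calls to the device layer."""

    def __init__(self, driver: FakeMotorDriver) -> None:
        self.driver = driver

    def forward(self, speed: float) -> None:
        self.driver.set_wheel_speeds(speed, speed)

    def turn_left(self, speed: float) -> None:
        self.driver.set_wheel_speeds(-speed, speed)


class Mind:
    """Level 3: platform independent; it never touches the driver directly."""

    def __init__(self, move: MoveCompetency) -> None:
        self.move = move

    def approach_user(self) -> None:
        self.move.forward(0.5)
```

Under this layering, moving to a different platform would mean swapping the driver and the competency implementation while the level 3 code stays unchanged.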

Level 2 Architecture

Level 2 will provide a reference architecture with modular capabilities called competencies. Not all competencies will make sense for all platforms, and different implementations of the same competency will be needed for different platforms.
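One way to express "same competency, different implementation per platform" is an abstract interface plus a per-platform registry. The sketch below assumes two made-up platforms, `handheld` and `robot`, and hypothetical class names; a platform that lacks a competency simply returns `None`.

```python
from abc import ABC, abstractmethod
from typing import Dict, Optional, Type


class TextToSpeech(ABC):
    """Competency interface shared by every platform that can speak."""

    @abstractmethod
    def say(self, text: str) -> str:
        ...


class ScreenTextToSpeech(TextToSpeech):
    """Hypothetical handheld implementation: shows the text on screen."""

    def say(self, text: str) -> str:
        return f"[screen] {text}"


class SpeakerTextToSpeech(TextToSpeech):
    """Hypothetical robot implementation: sends the text to a speaker."""

    def say(self, text: str) -> str:
        return f"[speaker] {text}"


# Which competencies each (assumed) platform provides, and with which class.
REGISTRY: Dict[str, Dict[str, Type[TextToSpeech]]] = {
    "handheld": {"text_to_speech": ScreenTextToSpeech},
    "robot": {"text_to_speech": SpeakerTextToSpeech},
}


def get_competency(platform: str, name: str) -> Optional[TextToSpeech]:
    """Return an instance of the competency, or None if the platform lacks it."""
    cls = REGISTRY.get(platform, {}).get(name)
    return cls() if cls is not None else None
```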

Competencies table

| Actuation | | | | Sensing | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| Speech/Sound | Visual | Movement | Object Manipulation | Identification | Vision | Sounds | Positioning | Distance | Internal State |
| Text to speech | Gesture execution | Move limb | Grasp/Place object | User recognition | Face detection | Speech recognition | Localization | Obstacle avoidance | Battery status |
| Non-verbal sounds | Lip sync | Follow person | | Object recognition | Gesture recognition (simple set) | Non-verbal sounds | Locate person | Locate object | Competence execution monitoring |
| | Facial expression | Path planning | | | Emotion recognition (simple set) | | | User proxemic distance sensing/control | |
| | Gaze/head movement | | | | Body tracking | | | | |
| | Expressive behaviour | | | | | | | | |

Some competencies are dependent on others, such as facial expression, which will require a face detection competency to operate. The computer vision competencies will be the initial focus.
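Dependencies like this can be handled by starting competencies in dependency order. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+). Only the facial expression dependency comes from the text; the `emotion_recognition` entry is an assumption added for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Maps each competency to the competencies it requires.
DEPENDENCIES: Dict[str, Set[str]] = {
    "face_detection": set(),
    "facial_expression": {"face_detection"},    # stated in the text
    "emotion_recognition": {"face_detection"},  # assumed for illustration
}


def start_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return an order in which every competency starts after its requirements."""
    return list(TopologicalSorter(dependencies).static_order())
```

`TopologicalSorter` raises `graphlib.CycleError` if two competencies depend on each other, which is a useful early check for a misconfigured platform.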

Level 3 Architecture

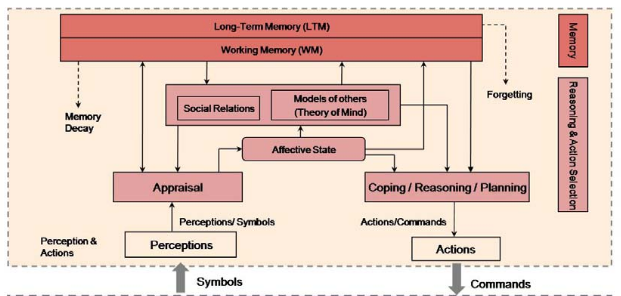

Appraisal

Appraisal is the process of mapping input events to affective changes - how the agent's current “emotional state” should react to things happening in the outside world. The exact nature of this mapping forms an important part of the agent's perceived personality.
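As a rough illustration, appraisal can be sketched as a lookup from events to changes in an affective state, with the lookup table standing in for personality. The two-dimensional valence/arousal state, the event names and the numbers are all assumptions made for this example, not part of the Lirec design.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class AffectiveState:
    valence: float = 0.0  # negative = unpleasant, positive = pleasant
    arousal: float = 0.0  # negative = calm, positive = excited


# A "personality" maps each event to (valence change, arousal change).
Personality = Dict[str, Tuple[float, float]]

CHEERFUL: Personality = {"user_greets": (0.4, 0.2), "user_leaves": (-0.1, -0.1)}
ANXIOUS: Personality = {"user_greets": (0.1, 0.4), "user_leaves": (-0.5, 0.3)}


def clamp(value: float) -> float:
    """Keep a value inside the range [-1, 1]."""
    return max(-1.0, min(1.0, value))


def appraise(
    state: AffectiveState, event: str, personality: Personality
) -> AffectiveState:
    """Return the new affective state after an event; unknown events change nothing."""
    d_valence, d_arousal = personality.get(event, (0.0, 0.0))
    return AffectiveState(
        clamp(state.valence + d_valence), clamp(state.arousal + d_arousal)
    )
```

The same event produces different affective changes under the two tables, which is the sense in which the mapping shapes the perceived personality.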

Coping/Reasoning/Planning

Similar to appraisal, but in reverse: what should be done given the agent's current affective state, along with its current tasks etc.
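A deliberately small sketch of this reverse mapping, assuming the affective state is reduced to a single `valence` number and tasks are a simple queue. The threshold and the action names are hypothetical.

```python
from typing import List


def choose_action(valence: float, tasks: List[str]) -> str:
    """Pick an action from the affective state first, then from pending tasks."""
    # Assumed rule: a strongly negative state takes priority over any task.
    if valence < -0.5:
        return "seek_reassurance"
    if tasks:
        return tasks[0]
    return "idle"
```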

Affective State

The affective state is the emotional state of the agent. It needs to influence all the decision making the agent carries out, whether as part of the long-term planning strategy or the more reactive decisions.

As the affective state feeds into the appraisal, which in turn feeds into the affective state, a feedback mechanism is created, which helps the agent adapt to changing events.
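The feedback loop can be sketched by letting the current state scale how strongly each new event is felt. The specific rule below (a low mood amplifies negative events and dampens positive ones) is an assumption chosen only to make the loop visible; the source does not specify one.

```python
from typing import List


def appraise(valence: float, event_impact: float) -> float:
    """Return the new valence; the current valence scales the event's effect."""
    if event_impact < 0:
        gain = 1.0 - 0.5 * valence  # low mood -> negative events hit harder
    else:
        gain = 1.0 + 0.5 * valence  # good mood -> positive events land better
    return max(-1.0, min(1.0, valence + gain * event_impact))


def run(event_impacts: List[float], valence: float = 0.0) -> List[float]:
    """Feed each appraisal result back in as the state for the next event."""
    history = []
    for impact in event_impacts:
        valence = appraise(valence, impact)
        history.append(valence)
    return history
```

With this rule, two identical negative events in a row move the state by more than twice the effect of the first, because the second appraisal starts from an already lowered state.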

Social Relations

This is where the agent models the relationships it has with other agents and humans, and the relationships other agents/humans have with it, and how they change.

The agent also needs an idea of how its actions will change these relationships and, importantly, to learn from past events and relationships. This is stored in the agent's memory.

Models of others (Theory of Mind)

This is where the information about the agents and humans that the agent has met is stored. Each model will have a similar architectural form to the agent itself. This means that in order to estimate what another agent will think of an action, the agent can run an appraisal using its model of the other agent, and look at the changes to that model's affective state. The idea is that as more information is gathered (the more the agent gets to know its user) the better these estimates will become.

The Lirec model is only recursive to one level, i.e. it does not attempt to model other agents' models of others.
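A sketch of this idea, assuming a single `valence` number as the affective state and a per-action reaction table as the appraisal. `MindModel`, `Agent`, `estimate_reaction` and `observe` are hypothetical names. The models of others are plain `MindModel` instances with no `others` of their own, which gives the one-level recursion.

```python
import copy
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class MindModel:
    """Shared architectural form: an affective state plus an appraisal."""

    valence: float = 0.0
    reactions: Dict[str, float] = field(default_factory=dict)  # action -> change

    def appraise(self, action: str) -> None:
        change = self.reactions.get(action, 0.0)
        self.valence = max(-1.0, min(1.0, self.valence + change))


@dataclass
class Agent(MindModel):
    """The agent itself: the same form, plus one level of models of others."""

    others: Dict[str, MindModel] = field(default_factory=dict)

    def estimate_reaction(self, who: str, action: str) -> float:
        """Run the appraisal on a copy of the model and report the change."""
        trial = copy.deepcopy(self.others[who])
        before = trial.valence
        trial.appraise(action)
        return trial.valence - before

    def observe(self, who: str, action: str, observed_change: float) -> None:
        """Refine the model with what actually happened."""
        self.others[who].reactions[action] = observed_change
```

Running the appraisal on a copy keeps the estimate hypothetical: the stored model only changes when `observe` records a real outcome.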

Long term memory

The long term memory is where the agent stores information about its history of interactions with the user and other agents. This memory should be linked to all the decision making in the agent.

Short term memory

Where the agent keeps its current goals, plans and action rules.
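The two memories could be sketched as simple data structures: an append-only interaction history for the long term, and the current goals, plans and action rules for the short term. The field names and the `Interaction` record are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Interaction:
    partner: str           # user or agent involved
    event: str             # what happened
    valence_change: float  # how it affected the agent (assumed measure)


@dataclass
class LongTermMemory:
    history: List[Interaction] = field(default_factory=list)

    def record(self, interaction: Interaction) -> None:
        self.history.append(interaction)

    def with_partner(self, partner: str) -> List[Interaction]:
        """Return all remembered interactions with one partner, oldest first."""
        return [i for i in self.history if i.partner == partner]


@dataclass
class ShortTermMemory:
    goals: List[str] = field(default_factory=list)
    plans: List[List[str]] = field(default_factory=list)      # each plan is a list of steps
    action_rules: Dict[str, str] = field(default_factory=dict)  # trigger -> action
```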